The best way to learn anything is by using scenarios, so my main motto of delivering the knowledge is by creating scenarios and explaining concepts through it. so please bear with me for some time.

Here comes the story:

While migrating the data from **On-Prem DB **to any Cloud-DWH, the first thing we need to have in hand is the structure of tables.

Because it is the only way that we can load the data into it. So in this particular example, i have my source system columns in an excel sheet, and i need to generate, the DDL's based on the rows present in it.

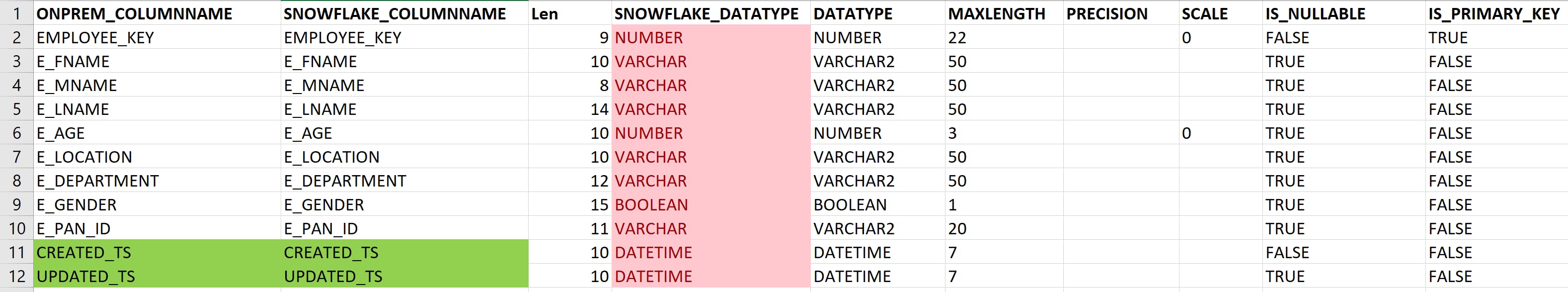

Below is the sample table that depicts the on-prem table schema in an excel sheet:

Scenario : Recently, my team lead has instructed me to generate the DDL's manually !

Instantaneous Feeling :

Obviously, when you need to generate the DDLs for the dimension and fact tables which are hundreds in count it feels, copious and monotonous work isn't it .

My feeling:

Finally, after some brainstorming !

Really, automation works perfect to solve this problem, So i have figured to solve this problem, using pandas.

So here is the code that i have used, to generate the DDL'S.

import pandas as pd

import math

# PASSING THE PATH OF THE EXCEL FILE TO THE PANDAS

xls = pd.ExcelFile('C:\\file_path\\onprem_ddls.xlsx')

# GETTING THE FILE NAMES AS A LIST

sheet_names = list(xls.sheet_names)

# LOOPING ALL THE SHEET_NAMES

for table_name in sheet_names:

df = pd.read_excel(xls, table_name)

# ONPREM TO SNOWFLAKE DATATYPE MAPPING DICTIONARY

data_type = {'VARCHAR2': 'VARCHAR',

'TIMESTAMP(9)': 'DATETIME',

'TIMESTAMP(6)': 'DATETIME',

'DATE': 'DATE',

'NUMBER': 'NUMBER',

'MONEY': 'NUMBER(10,2)',

'BOOLEAN': 'VARCHAR(1)',

'DATETIME': 'DATETIME'}

load_ddls = open('onprem_to_snowflake.sql', 'a')

ddl_builder = "CREATE OR REPLACE TABLE "

ddl_builder += table_name + " ( "

df.reset_index(inplace=True)

# SLICE THE REQUIRED COLUMNS TO BUILD THE DDL

data = df[['SNOWFLAKE_COLUMNNAME', 'DATATYPE', 'MAXLENGTH',

'PRECISION', 'SCALE', 'IS_NULLABLE', 'IS_PRIMARY_KEY']]

# GET THE COLUMNS FROM THE DATA FRAME AS A SINGLE ROW AND ASSIGN THEM TO A VARIABLE

for col_name, data_types, length, precision, scale, is_nullable, check_primary, in data.itertuples(index=False):

if length == 1:

if data_types == 'BOOLEAN':

if check_primary:

ddl_builder += col_name + " " + data_type[data_types] + " PRIMARY KEY NOT NULL " + " ,"

elif is_nullable:

ddl_builder += col_name + " BOOLEAN " + " NOT NULL " + " ,"

else:

ddl_builder += col_name + " " + data_type[data_types] + " ,"

else:

if check_primary:

ddl_builder += col_name + " VARCHAR(1)" + " PRIMARY KEY NOT NULL "+" ,"

elif is_nullable:

ddl_builder += col_name + " VARCHAR(1) " + " NOT NULL " + " ,"

else:

ddl_builder += col_name + " VARCHAR(1)" + " ,"

elif length == 2:

if check_primary:

ddl_builder += col_name + " VARCHAR(2) " + " PRIMARY KEY NOT NULL " + " ,"

elif is_nullable:

ddl_builder += col_name + " VARCHAR(2) " + " NOT NULL " + " ,"

else:

ddl_builder += col_name + " VARCHAR(2) " + " ,"

elif length > 2: #IF THE SOURCE DATA TYPE LENGTH IS GREATER THAN 2 THEN THE BELOW CODE WILL EXECUTE.

if data_types not in data_type:

print(data_types)

elif data_types == 'NUMBER':

if math.isnan(precision) or math.isnan(scale):

if check_primary:

ddl_builder += col_name + " " + data_type[data_types] + "("+str(int(length)) + "," + str(0)+")" + " PRIMARY KEY NOT NULL "+" ,"

elif is_nullable:

ddl_builder += col_name + " " + data_type[data_types] + "("+str(int(length)) + "," + str(0)+")" + " NOT NULL " + " ,"

else:

ddl_builder += col_name + " " + data_type[data_types] + "("+str(int(length)) + "," + str(0)+")" + " ,"

else:

if check_primary:

ddl_builder += col_name + " " + data_type[data_types] + "("+str(int(precision)) + "," + str(int(scale))+")" + " PRIMARY KEY NOT NULL " + " ,"

elif is_nullable:

ddl_builder += col_name + " " + data_type[data_types] + "("+str(int(precision)) + "," + str(int(scale))+")" + " NOT NULL " + " ,"

else:

ddl_builder += col_name + " " + data_type[data_types] + "("+str(int(precision)) + "," + str(int(scale))+")" + " ,"

elif data_types == 'VARCHAR2':

if check_primary:

ddl_builder += col_name + " " + data_type[data_types] + "("+str(int(length))+")" + " PRIMARY KEY NOT NULL "+" ,"

elif is_nullable:

ddl_builder += col_name + " " + data_type[data_types] + "("+str(int(length))+")" + " NOT NULL " + " ,"

else:

ddl_builder += col_name + " " + data_type[data_types] + "("+str(int(length))+")" + " ,"

else:

if check_primary:

ddl_builder += col_name + " " + data_type[data_types] + " PRIMARY KEY NOT NULL " + " ,"

elif is_nullable:

ddl_builder += col_name + " " + data_type[data_types] + " NOT NULL " + " ,"

else:

ddl_builder += col_name + " " + data_type[data_types] + " ,"

load_ddls.write(ddl_builder[:len(ddl_builder)-1]+');')

load_ddls.close()

ddl_builder = ""

The Code once Executed, will generate a file that has all the DDls in it.

Yeah Kudos, we have successfully automated the process of generating the ddl for a single table, in the same way if you have 100's of tables also you can automate that with this simple script.

Sample output:

CREATE OR REPLACE TABLE EMP_DIMENSION (

EMPLOYEE_KEY NUMBER(22,0) PRIMARY KEY NOT NULL,

E_FNAME VARCHAR(50) ,

E_MNAME VARCHAR(50) ,

E_LNAME VARCHAR(50) ,

E_AGE NUMBER(3,0) ,

E_LOCATION VARCHAR(50) ,

E_DEPARTMENT VARCHAR(50) ,

E_GENDER VARCHAR(1) ,

E_PAN_ID VARCHAR(20) ,

CREATED_TS DATETIME ,

UPDATED_TS DATETIME );

In this way for any dimension or fact tables, you can simply generate the ddls by using the above python script. I hope you have liked my approach of explaining the things, If yes please press the like button, Which will boost me to write more and more articles like this. Until then Signing off as Naveen Vadlamudi

Thank you for reading this.