Data Engineer: An Unsung Hero!

The work done by a data engineer is enormous. If you have ever worked on a data project, you know that most of the effort goes into setting up the data pipelines, which involves extracting, integrating, and ordering the data from the source systems.

So today I will give an introduction to the technical terms that are used by a data engineer.

Note: Please be patient and read slowly!

1) Job

Typical Data Engineer interview

My definition: A Job is a Directed Acyclic Graph (DAG) of components, each of which performs a unit operation in an ETL process, such as connection initialization, data extraction, data transformation, or data load.
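To make the DAG idea concrete, here is a minimal sketch using Python's standard `graphlib` module. The component names and the `run_component` helper are my own placeholders, not part of any real ETL tool.

```python
from graphlib import TopologicalSorter

# Hypothetical components of one job; each key maps to the components it depends on.
job = {
    "init_connection": set(),
    "extract": {"init_connection"},
    "transform": {"extract"},
    "load": {"transform"},
}

def run_component(name: str) -> str:
    # Placeholder for the real unit of work (assumption for illustration).
    return f"ran {name}"

def run_job(dag: dict[str, set[str]]) -> list[str]:
    """Run every component only after all of its dependencies have run."""
    order = TopologicalSorter(dag).static_order()
    return [run_component(name) for name in order]

log = run_job(job)
print(log)
```

Because the graph is acyclic, a valid execution order always exists; if someone adds a cycle by mistake, `TopologicalSorter` raises a `CycleError` instead of looping forever.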

Jobs mainly fall into 2 classes:

a) Historic Job / One-Time Load

b) Incremental Job / Day-by-Day Load

a) Class -1 ---> Historic Job :

A historic job, also known as a one-time load, transfers the entire data set from the source system to the target system, only once.

Example: Assume a source system table X that contains a billion records, and your goal is to transfer all of them to the cloud data warehouse. This takes a lot of time and effort, so you set up a data pipeline and trigger the historic job, which loads the entire data from the on-prem system to the target system in one go.

From the next day onward, you only need to transfer the records that arrived on the previous day.

b) Class -2 ---> Incremental Load: An incremental load is a process that loads data from the source system repeatedly at defined intervals, such as every day, every 2 days, or every week. Each run picks up only the records that are new since the previous run.

2) Slowly Changing Dimensions: (SCD - {1,2,3,4,6}) These patterns help us track changed data in a dimension table.
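As one example, SCD Type 2 keeps history by closing the current row and adding a new one, whereas Type 1 simply overwrites the old value. Below is a rough sketch of Type 2; the `dim_customer` table and its column names (`valid_from`, `valid_to`, `is_current`) are hypothetical, chosen only for this illustration.

```python
from datetime import date

# Hypothetical customer dimension with Type 2 history columns.
dim_customer = [
    {"customer_id": 1, "city": "Pune", "valid_from": date(2023, 1, 1),
     "valid_to": None, "is_current": True},
]

def apply_scd2(dim, customer_id, new_city, change_date):
    """Close the current row and append a new version if the city changed."""
    for row in dim:
        if row["customer_id"] == customer_id and row["is_current"]:
            if row["city"] == new_city:
                return  # nothing changed, keep the current row
            row["valid_to"] = change_date
            row["is_current"] = False
            break
    dim.append({"customer_id": customer_id, "city": new_city,
                "valid_from": change_date, "valid_to": None, "is_current": True})

apply_scd2(dim_customer, 1, "Mumbai", date(2024, 6, 1))
```

After the call, the dimension holds two rows for the same customer: the old city with an end date, and the new city marked as current.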

3) Orchestration Job: An Orchestration Job is a Job whose sole responsibility is to orchestrate the execution of other Jobs, that is, to execute a Job, wait for it to finish, execute the next Job on successful completion, or handle errors should the Job fail.

Definition source: talendframework.com/orchestration-jobs
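Here is a small sketch of that behaviour in plain Python: run one job, wait for it, move to the next on success, and handle the error on failure. The three job functions are made-up stand-ins, and real orchestration tools do far more (retries, scheduling, alerts).

```python
from typing import Callable

# Hypothetical jobs; transform_job fails on purpose to show the error path.
def extract_job() -> None:
    pass

def transform_job() -> None:
    raise RuntimeError("bad record")

def load_job() -> None:
    pass

def orchestrate(jobs: list[tuple[str, Callable[[], None]]]) -> list[str]:
    """Run jobs one after another and stop at the first failure."""
    status = []
    for name, job in jobs:
        try:
            job()  # blocks until the job finishes
        except Exception as exc:
            status.append(f"{name}: failed ({exc})")
            break  # do not run downstream jobs
        status.append(f"{name}: ok")
    return status

status = orchestrate([
    ("extract", extract_job),
    ("transform", transform_job),
    ("load", load_job),
])
print(status)
```

Note that `load_job` never runs here, because the orchestration job stops as soon as the transform step fails.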

4) Transformation Job: A Transformation Job is a job whose sole responsibility is to transform the data on the fly by performing modifications on it.

5) Design Patterns in Data Engineering :

Data Engineering has many design patterns. Here are the main ones.

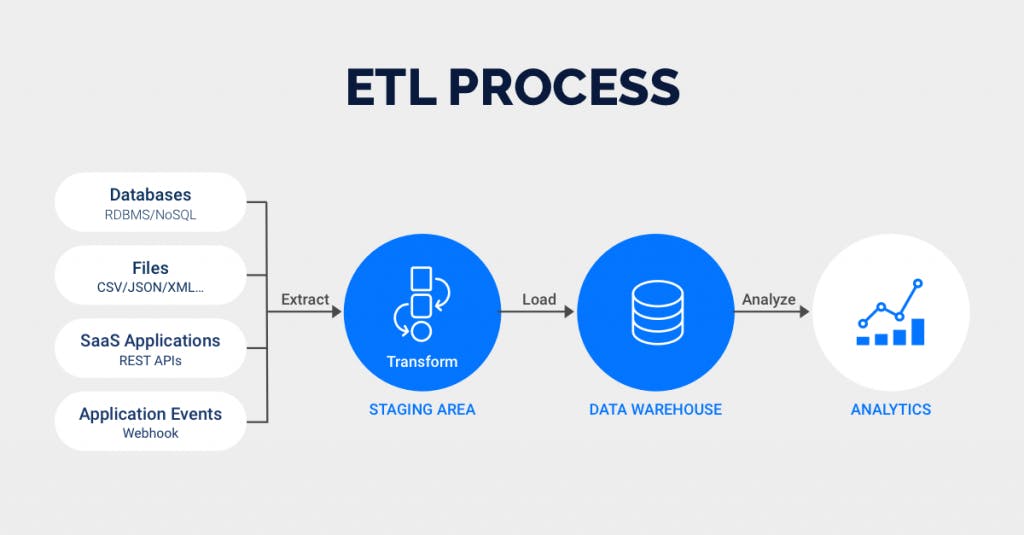

A) ETL: (Extract Transform Load) This is the plain old design pattern in data engineering, where data is extracted from the source system, transformed, and then loaded into the target system, as shown in the diagram below.

image source:

rivery.io/blog/etl-vs-elt-whats-the-differe..

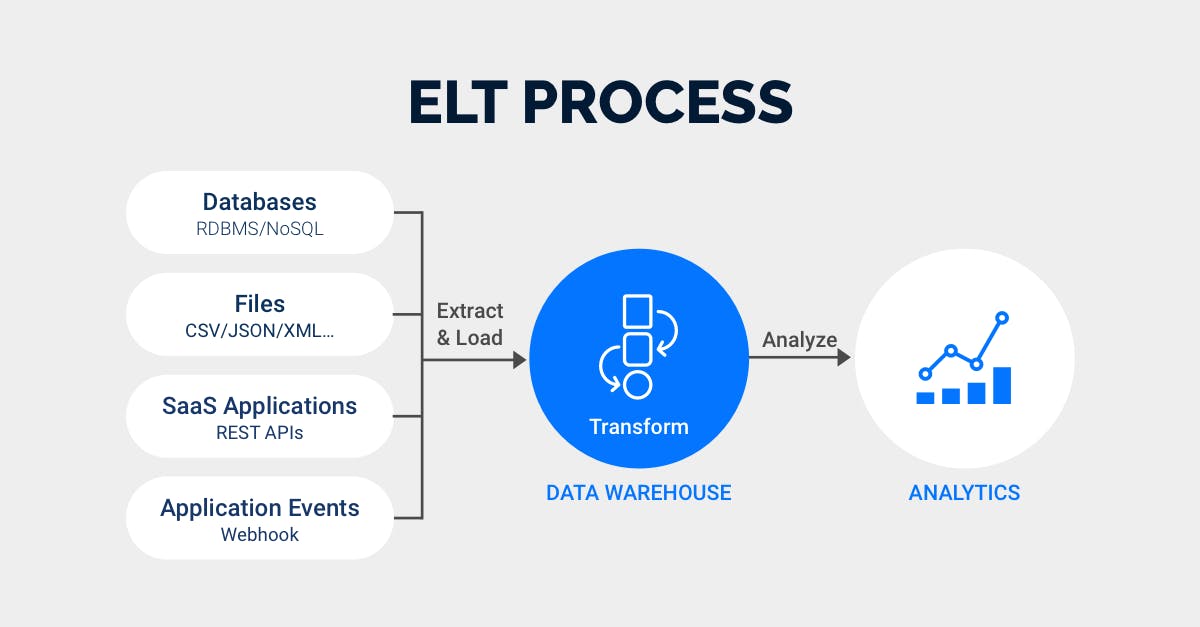
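A tiny ETL sketch, assuming an in-memory SQLite database as a stand-in for the target system and a made-up `raw_rows` list as the source. The point to notice is that the cleaning happens in Python before anything reaches the target.

```python
import sqlite3

# Extract: hypothetical rows pulled from a source system.
raw_rows = [("alice", "10.5"), ("bob", "n/a"), ("carol", "7")]

def transform(rows):
    """Transform outside the target: fix names, drop rows with bad amounts."""
    clean = []
    for name, amount in rows:
        try:
            clean.append((name.title(), float(amount)))
        except ValueError:
            continue  # skip rows whose amount is not a number
    return clean

# Load: only the already-clean data is written to the target.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE sales (name TEXT, amount REAL)")
warehouse.executemany("INSERT INTO sales VALUES (?, ?)", transform(raw_rows))

etl_result = warehouse.execute(
    "SELECT name, amount FROM sales ORDER BY name"
).fetchall()
```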

B) ELT: (Extract Load Transform) ELT is a highly scalable design pattern that has gained much popularity with the rise of cloud technologies and compute power. The raw data is loaded first and then transformed inside the target system, and many current data projects use this pattern.

image source:

rivery.io/blog/etl-vs-elt-whats-the-differe..

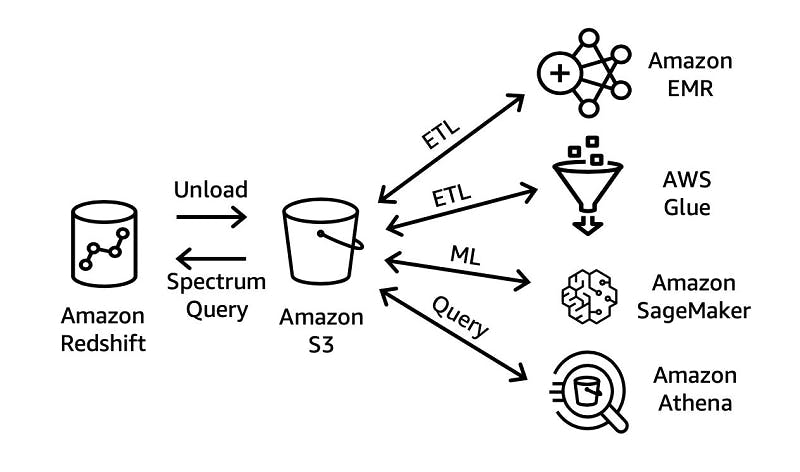
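For contrast, here is a small ELT sketch with the same kind of made-up rows, again assuming in-memory SQLite as a stand-in for a cloud data warehouse. The raw data is loaded untouched, and the transformation runs as SQL inside the target.

```python
import sqlite3

# Extract: hypothetical rows pulled from a source system.
raw_rows = [("alice", "10.5"), ("bob", "n/a"), ("carol", "7")]

warehouse = sqlite3.connect(":memory:")

# Load: write the raw data as-is, including the bad row.
warehouse.execute("CREATE TABLE raw_sales (name TEXT, amount TEXT)")
warehouse.executemany("INSERT INTO raw_sales VALUES (?, ?)", raw_rows)

# Transform: use the warehouse's own compute (SQL) to build the clean table.
warehouse.execute("""
    CREATE TABLE sales AS
    SELECT name, CAST(amount AS REAL) AS amount
    FROM raw_sales
    WHERE amount GLOB '[0-9]*'
""")

elt_result = warehouse.execute(
    "SELECT name, amount FROM sales ORDER BY name"
).fetchall()
raw_count = warehouse.execute("SELECT COUNT(*) FROM raw_sales").fetchone()[0]
```

The raw table still holds all three rows, so the transformation can be changed and re-run later without going back to the source system.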

C) EtLT: (Extract Transform Load Transform) Look at the diagram below. In the EtLT design pattern, data security is the highest priority, so while unloading the data to S3 we mask anything that includes personally identifiable information or medical information. Once the data is on S3, we have completed the EtL part, i.e. Extract, (light) transform, Load. Now we can perform the main Transformation and load the data into the destination data warehouse for further analysis.

image source: aws.amazon.com/blogs/big-data/etl-and-elt-d..

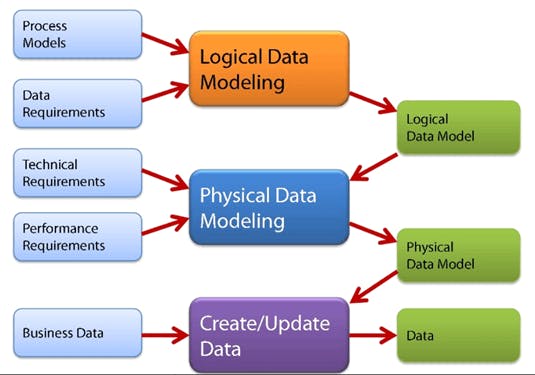
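The small "t" step can be sketched like this. Everything here is an assumption for illustration: the `patients` records are made up, a plain list stands in for the S3 staging area, and a bare SHA-256 hash is used only to keep the example short (a real project would follow its own masking policy).

```python
import hashlib

# Extract: hypothetical records containing PII and medical information.
patients = [
    {"patient_id": 1, "email": "a@example.com", "diagnosis": "flu", "visits": 3},
    {"patient_id": 2, "email": "b@example.com", "diagnosis": "cold", "visits": 1},
]

def mask(value: str) -> str:
    """Replace a sensitive value with its SHA-256 hex digest."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()

def small_t(record: dict) -> dict:
    """Light transform before loading: mask sensitive fields only."""
    masked = dict(record)  # copy, so the source record is not modified
    masked["email"] = mask(record["email"])
    masked["diagnosis"] = "REDACTED"
    return masked

# Load: the staging list stands in for files unloaded to S3.
staged = [small_t(p) for p in patients]

# Big T: the heavier transformation runs later, on masked data only.
total_visits = sum(row["visits"] for row in staged)
```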

6) Data Modelling: Data modeling is the process of creating an ER diagram of either a whole information system or parts of it to communicate connections between data points and structures. It is an iterative process driven by the system requirements, which produces the conceptual, logical, and physical models.

Definition Source : ibm.com/cloud/learn/data-modeling

image source: guru99.com/data-modelling-conceptual-logica..

As I understand it, these are the basic and most important technical terms that every data engineer needs to understand.

So once again if you like my way of explaining things, please hit the like button, because it gives me a sense of appreciation. Until then take care and be happy.